(Now) Build a GPU Deep Learning environment with GeForce GTX 960

Introduction

I remembered that my own desktop PC, which I have rarely used lately, has an NVIDIA GPU (GeForce GTX 960) in it, so I decided to use it to build an environment for deep learning. Even though I bought this GPU five years ago, processing was extremely fast, and I was surprised at how great a GPU is (laughs). The card is an msi GeForce GTX960 Gaming 2G MGSV.

Environment

- Windows 10 Pro (Version 1909)

- Python 3.6.4 (Anaconda3 5.1.0)

Requirements

- NVIDIA GPU

- Tensorflow-gpu

- Keras

- Microsoft Visual Studio C++ (MSVC)

- NVIDIA Driver

- CUDA

- cuDNN

Version confirmation

To use Tensorflow / Keras on a GPU, the version of each requirement above has to match the others.

The Tensorflow site has a table of tested combinations, so be sure to check it.

Basically, decide on the Tensorflow version first and install the versions of the other components that correspond to it.

Unless you have a reason to pin a specific version, the latest version is fine.

This article was posted on 2020/03/21 and builds the environment with 2.0.0.
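The version matching can be written down as a small lookup. This is only a sketch: the `TESTED` table holds just the one combination used in this article (Python 3.5 to 3.7, MSVC 2017, CUDA 10.0, cuDNN 7.4 for tensorflow-gpu 2.0.0), and `in_series` and `mismatches` are names made up for illustration. Always confirm against the table on the Tensorflow site.

```python
# Combination used in this article for tensorflow-gpu 2.0.0 on Windows.
# Assumption: copied by hand; check the Tensorflow site for the real table.
TESTED = {
    "2.0.0": {
        "python": ["3.5", "3.6", "3.7"],
        "msvc": ["2017"],
        "cuda": ["10.0"],
        "cudnn": ["7.4"],
    },
}


def in_series(actual, series):
    """True if e.g. actual '7.4.2' belongs to the release series '7.4'."""
    want = series.split(".")
    return actual.split(".")[:len(want)] == want


def mismatches(tf_version, python, msvc, cuda, cudnn):
    """Return the names of the components that do not match the table."""
    if tf_version not in TESTED:
        raise ValueError("no entry for Tensorflow " + tf_version)
    expected = TESTED[tf_version]
    installed = {"python": python, "msvc": msvc, "cuda": cuda, "cudnn": cudnn}
    return [
        name
        for name in ("python", "msvc", "cuda", "cudnn")
        if not any(in_series(installed[name], s) for s in expected[name])
    ]


print(mismatches("2.0.0", python="3.6.4", msvc="2017", cuda="10.0", cudnn="7.4.2"))
```

An empty list means every component falls inside the tested series.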

Create Conda environment & install Tensorflow-gpu

If the CPU and GPU versions of Tensorflow are installed side by side, the CPU version seems to be selected automatically, so create a new environment.

cmd

> conda create -n tf200gpu python=3.6.4

Once it is created, activate the environment and install Tensorflow-gpu.

cmd

> conda activate tf200gpu

(tf200gpu)> pip install tensorflow-gpu==2.0.0

If you get the error below, refer to the articles listed after it.

ERROR: Cannot uninstall 'wrapt'. It is a distutils installed project and thus we cannot accurately determine which files belong to it which would lead to only a partial uninstall.

- Tensorflow install memorandum

- 63rd day: I installed tensorflow.

After the installation is complete, check the following two points with pip list.

(At this stage, running import tensorflow in Python still raises an error, because CUDA / cuDNN are not installed yet.)

- The CPU version of Tensorflow is not installed.

- Tensorflow-gpu is installed and its version is 2.0.0.
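The pip list check can also be scripted. The sketch below is an illustration, not part of the original procedure: `installed_packages` and `tensorflow_problems` are made-up names, and it assumes the packages appear in pip under the names `tensorflow` and `tensorflow-gpu`.

```python
import json
import subprocess
import sys


def installed_packages():
    """Return {lowercase name: version} for the active environment via pip list."""
    result = subprocess.run(
        [sys.executable, "-m", "pip", "list", "--format=json"],
        stdout=subprocess.PIPE,
        check=True,
    )
    entries = json.loads(result.stdout.decode("utf-8"))
    return {entry["name"].lower(): entry["version"] for entry in entries}


def tensorflow_problems(packages, wanted="2.0.0"):
    """List what is wrong with the Tensorflow packages; empty list means OK."""
    problems = []
    if "tensorflow" in packages:
        problems.append("the CPU package 'tensorflow' is also installed")
    version = packages.get("tensorflow-gpu")
    if version is None:
        problems.append("'tensorflow-gpu' is not installed")
    elif version != wanted:
        problems.append("'tensorflow-gpu' is {} instead of {}".format(version, wanted))
    return problems
```

Inside the tf200gpu environment, `print(tensorflow_problems(installed_packages()))` should print an empty list if both points hold.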

Install Keras

Keras should install without any problems. Version 2.3.1 was installed in my environment.

cmd

(tf200gpu)> pip install keras

As with Tensorflow, check with pip list that it has been installed.

Install Visual Studio C++ 2017

Download the version of Visual Studio that matches your Tensorflow version from the Microsoft download site (https://visualstudio.microsoft.com/en/vs/older-downloads/) and install it.

This time that is 2017, so install Visual Studio Community 2017.

During installation, check the "Desktop development with C++" workload.

(It takes quite a while ...)

Install NVIDIA Driver

On the NVIDIA download site, select your product (here, the GeForce GTX 960) and get the installer. For a different graphics board, change the selection accordingly.

You do not need to change any settings; just keep clicking "Next" and the installation should complete without any problems.


CUDA installation

CUDA is a general-purpose parallel computing platform for GPUs developed and provided by NVIDIA.

Download the installer from the CUDA Toolkit download site (https://developer.nvidia.com/cuda-toolkit-archive) and run it.

You need a free account to get the installer, so create one.

This time, install CUDA Toolkit 10.0. Select the OS type and version, then select exe (network).

(Select exe (local) if you are installing on a PC that cannot connect to the Internet.)

After downloading, start the installer and, as with the driver, keep clicking "Next" to install.

cuDNN installation

Next, install cuDNN, a library for deep learning published by NVIDIA.

An account is required here too, so log in with the account you created for CUDA.

This time, get version 7.4.2 for CUDA 10 from the cuDNN download site.

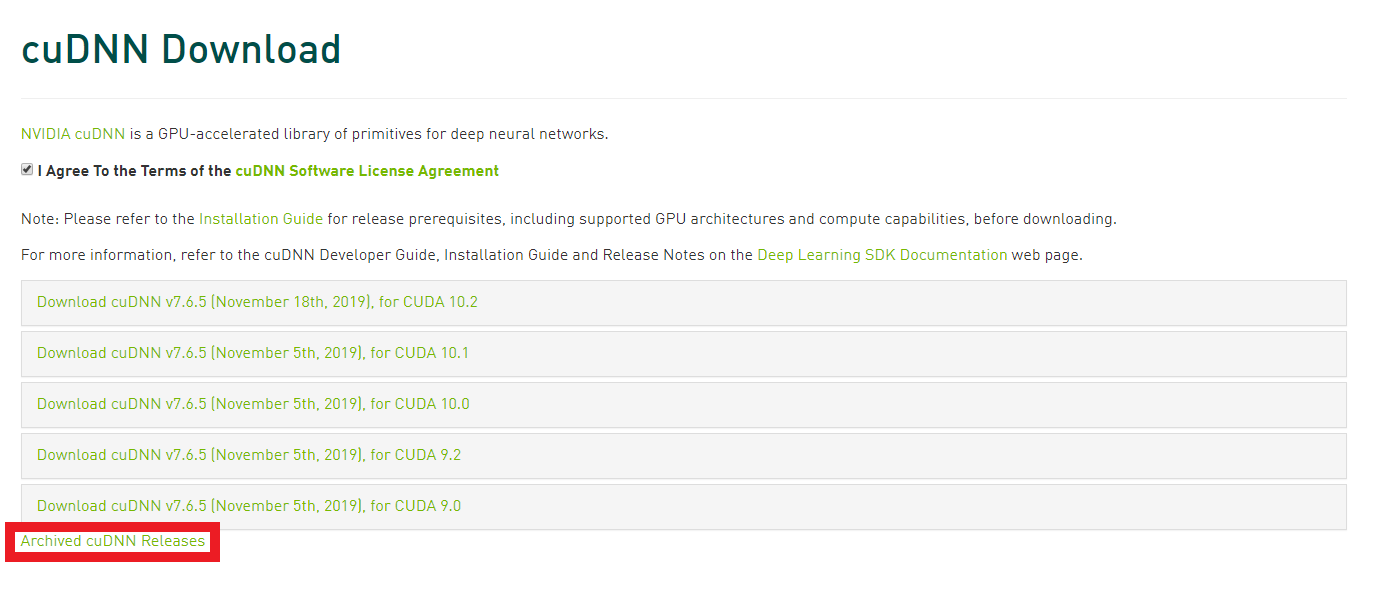

If you check "I Agree To the Terms of the cuDNN Software License Agreement", you will be given several options. If you don't find the version you want, click "Archived cuDNN Releases" in the red frame to see past releases.

When you unzip the downloaded zip, you will find three folders, bin, include, and lib, and a text file called NVIDIA_SLA_cuDNN_Support.txt.

Open C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.0 in Windows Explorer. It contains bin, include, and lib folders as well, so copy the contents of each downloaded folder into the folder with the same name to complete the installation.

You may be asked for administrator privileges; if so, allow it.
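If you would rather script the copy than drag files in Explorer, something like the following should do the same job. It is a sketch under assumptions: `copy_cudnn` is a made-up helper, both folder paths are passed in by you, and the script has to run with enough privileges to write under Program Files.

```python
import shutil
from pathlib import Path


def copy_cudnn(cudnn_root, cuda_root, folders=("bin", "include", "lib")):
    """Copy every file under cudnn_root/<folder> into cuda_root/<folder>.

    Subfolders are recreated on the CUDA side. Returns the destination
    paths that were written.
    """
    copied = []
    for folder in folders:
        src_dir = Path(cudnn_root) / folder
        if not src_dir.is_dir():
            continue  # nothing to copy for this folder
        for src in sorted(src_dir.rglob("*")):
            if src.is_file():
                dst = Path(cuda_root) / folder / src.relative_to(src_dir)
                dst.parent.mkdir(parents=True, exist_ok=True)
                shutil.copy2(str(src), str(dst))
                copied.append(dst)
    return copied
```

For this article, `cuda_root` would be `r"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.0"` and `cudnn_root` the folder from the unzipped archive that contains bin, include, and lib.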

Check if the GPU is recognized

This completes all of the installation steps. To check whether the GPU is recognized properly, run the following at the command prompt.

cmd

(tf200gpu)> python -c "from tensorflow.python.client import device_lib;print(device_lib.list_local_devices())"

It is recognized properly!
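The same check can also be run from a script. This is a minimal sketch: `gpu_device_names` and `detect_gpus` are names made up for illustration, and it assumes the entries returned by `device_lib.list_local_devices()` expose `name` and `device_type` attributes, as they do in Tensorflow 2.0.0.

```python
def gpu_device_names(devices):
    """Pick the names of the GPU entries out of a list of device descriptions."""
    return [device.name for device in devices if device.device_type == "GPU"]


def detect_gpus():
    """Ask Tensorflow for its local devices and return the GPU names."""
    # Imported here so the file can still be loaded where Tensorflow is missing.
    from tensorflow.python.client import device_lib

    return gpu_device_names(device_lib.list_local_devices())
```

Calling `detect_gpus()` in the tf200gpu environment returns an empty list when only the CPU is visible to Tensorflow.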

Let's do it!

Now for training with Tensorflow-gpu ... but I was blocked by the following error.

tensorflow.python.framework.errors_impl.UnknownError: Failed to get convolution algorithm. This is probably because cuDNN failed to initialize, so try looking to see if a warning log message was printed above.

When I checked against the article I referred to, neither cudatoolkit nor cudnn appeared in conda list ...

So I tried `conda install cudnn`, candidates came up, and I installed them. By the way, it seems that `conda install cudnn` installs both cudatoolkit and cudnn.

Packages installed by this command:

- cudatoolkit 10.2.89

- cudnn 7.6.5

I ran the training code again and it worked fine on the GPU!

I have now installed CUDA and cuDNN twice (and in different versions), so I'm not sure this is the correct method, but it's working for the time being (laughs). I will update the article if I run into problems or find out the correct way! (If you are familiar with this, please let me know in the comments ~ (´; ω; `))

Result

I measured with the following Python training code, which comes from an online training course. It trains an image recognition program that uses a CNN to classify input images into three classes. The training image data is loaded from a file saved in advance in .npy format. I trained on 157 images (an awkward number) of 50 x 50 pixels, with batch size 32 for 100 epochs ...

| Processor | Time (h:mm:ss) |
|---|---|
| CPU | 0:01:22.239975 |
| GPU | 0:00:15.542190 |

The GPU is about 5.3 times faster. (By the way, the installed CPU is an Intel Core i5-6600K @ 3.5GHz.)
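Dividing the two times gives the speedup factor. Here is a small sketch to recompute it from the strings in the table; `parse_elapsed` is a helper made up for this example.

```python
from datetime import timedelta


def parse_elapsed(text):
    """Turn an 'h:mm:ss.ffffff' string, as printed by train.py, into a timedelta."""
    hours, minutes, seconds = text.split(":")
    return timedelta(hours=int(hours), minutes=int(minutes), seconds=float(seconds))


cpu = parse_elapsed("0:01:22.239975")
gpu = parse_elapsed("0:00:15.542190")

# Dividing one timedelta by another yields a plain float ratio.
speedup = cpu / gpu
print("GPU speedup: {:.2f}x".format(speedup))
```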

train.py

import keras
from keras.models import Sequential
from keras.layers import Conv2D, MaxPooling2D
from keras.layers import Activation, Dropout, Flatten, Dense
from keras.utils import np_utils
import numpy as np
import datetime

classes = ["class1", "class2", "class3"]
num_classes = len(classes)
image_size = 50


def main():
    # data.npy holds the train/test split that was saved in advance
    X_train, X_test, y_train, y_test = np.load("./data.npy", allow_pickle=True)

    # Scale pixel values from 0-255 into the range [0, 1)
    X_train = X_train.astype("float") / 256
    X_test = X_test.astype("float") / 256

    # Convert integer labels to one-hot vectors
    y_train = np_utils.to_categorical(y_train, num_classes)
    y_test = np_utils.to_categorical(y_test, num_classes)

    model = model_train(X_train, y_train)
    model_eval(model, X_test, y_test)


def model_train(X, y):
    model = Sequential()

    # First convolution block: 2 x Conv(32) -> pooling -> dropout
    model.add(Conv2D(32, (3, 3), padding='same', input_shape=X.shape[1:]))
    model.add(Activation('relu'))
    model.add(Conv2D(32, (3, 3)))
    model.add(Activation('relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    model.add(Dropout(0.25))

    # Second convolution block: 2 x Conv(64) -> pooling -> dropout
    model.add(Conv2D(64, (3, 3), padding='same'))
    model.add(Activation('relu'))
    model.add(Conv2D(64, (3, 3)))
    model.add(Activation('relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    model.add(Dropout(0.25))

    # Classifier head: one output per class
    model.add(Flatten())
    model.add(Dense(512))
    model.add(Activation('relu'))
    model.add(Dropout(0.5))
    model.add(Dense(num_classes))
    model.add(Activation('softmax'))

    opt = keras.optimizers.Adam(lr=0.0001, decay=1e-6)

    model.compile(
        loss='categorical_crossentropy',
        optimizer=opt,
        metrics=['accuracy']
    )

    model.fit(X, y, batch_size=32, epochs=100)

    return model


def model_eval(model, X, y):
    scores = model.evaluate(X, y, verbose=1)
    print('Test Loss: ', scores[0])
    print('Test Accuracy: ', scores[1])


if __name__ == "__main__":
    # Measure the whole run: data loading, training, and evaluation
    start_time = datetime.datetime.now()
    main()
    end_time = datetime.datetime.now()
    print("Time: " + str(end_time - start_time))
